Storage

All data of LinDB will be stored on the local disk, and there are different storage structures according to different data types:

- Metirc Metadata: store Metric Name and its underlying Fields/Tag Keys;

- Tags(Series) Index: store all Tags under a Metric, this part is divided into 2 data types;

- Forward: Tags corresponding to Series ID;

- Inverted: The inverted index of Tag Key/Value, where Series ID are binding to Tag Value. Series ID set is compressed stored by RoaringBitMap ;

- Data: Storage of Data Point under all Time Series;

All the above data types are stored in a common underlying KV Store.

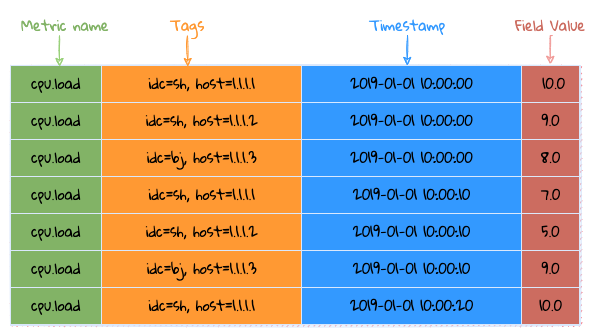

Time series characteristics

Before talking about storage, let's talk about the characteristics of time-series, as shown in the figure:

Time series characteristics

Time series characteristics

Time series data characteristics (according to its time characteristics, it can be divided into time-invariant and time-variant data)

- Time Series => Metric + Tags: This part of the data is basically a string, and this data occupies the bulk of the data packet, but it will not vary with time flows. Converting these strings into numerical values for storage to reduce storage costs;

- Fields: This part of the data is basically numeric and changes with time, but the numeric type is easy to compress;

Storage structure

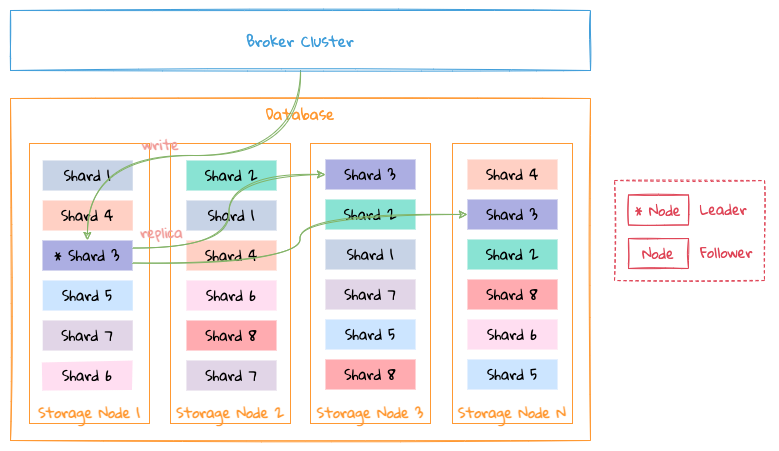

Database

storage database

storage database

- The data of a database are distributed on different nodes of the Storage cluster according to Shard;

- A Shard can have multiple replicas, please see Replication for details;

Shard

├─ db_test_1

| ├─ meta

| | ├─ namespace

| | ├─ metric

| | ├─ field

| | ├─ tagkey

| | └─ tagvalue

| └─ shard

| ├─ 1

| | ├─ inverted

| | ├─ forward

| | └─ segment

| | ├─ day

| | | ├─ 20190101

| | | | ├─ 01

| | | | ├─ 02

| | | | └─ 23

| | | └─ 20190102

| | ├─ month

| | | ├─ 201901

| | | | ├─ 01

| | | | ├─ 02

| | | | └─ 31

| | | └─ 201902

| | └─ year

| | ├─ 2019

| | | ├─ 01

| | | ├─ 02

| | | └─ 12

| | └─ 2020

| └─ 2

└─ db_test_2

The storage directory above describes how data are stored on a Storage node.

Taking the data structure of a single database on a single node as an example:

- meta: store all metadata, at database level:

- namespace

- metric

- field

- tag key

- tag value

- shard: A database will have multiple shards on a single node, and each shard has the following data:

- index: store forward and reverse indexes;

- segment: store the data of each time slice;

See Invertd Index for the structure of meta and index.

All data are divided into different parts by the Interval of the database to store specific data in time slices:

- The time series has strong time correlation, which makes it easier for querying;

- Convenient for handling TTL. If the data expires, just delete the corresponding directory directly;

- There are multiple segments under each shard, and each segment stores the data of the corresponding time slice according to the corresponding interval;

- Why are there many data families stored according to interval under each segment? This is mainly because the main problem solved by LinDB is to store a large amount of monitoring data. The general monitoring data is basically written at the latest time, and basically no historical data will be written. The data storage of the entire LinDB is similar to the LSM method, in order to reduce the merge operation between data files which leads to write amplification. then the re-sharding policy of segment time slice is adopted.

The following is an example of interval being 10s:

- segment is stored by day;

- Each segment is divided into data family by hour, one family per hour, and the files in each family store specific data in columns;

KV store

└─ kv_store_1

├─ CURRENT

├─ LOCK

├─ MANIFEST-000010

├─ OPTIONS

├─ family_1

| ├─ 000001.sst

| ├─ 000002.sst

| ├─ 000004.sst

| └─ 000008.sst

├─ family_2

| ├─ 000011.sst

| ├─ 000012.sst

| ├─ 000014.sst

| └─ 000018.sst

└─ family_3

The whole KV Store is similar to LSM, but it is different from LSM. The main differences are as follows:

- There is no Memory Table, because the whole system has a Memory Database to store all recently data, and these data will be directly written to the KV Store later;

- Key's type is uint32, because all string will be converted to uint32 according to the timing characteristics, so the underlying KV Store is directly designed as an uint32 => binary structure;

The directory structure is very similar to rocksdb and supports column family.

- CURRENT: record the currently valid MANIFEST file;

- LOCK: file lock to prevent multiple processes from opening the same KV Store;

- MANIFEST: change log of all sstable changes, including some change log of sequence, etc.;

- OPTIONS: KV Store configuration information, including configuration information at each column family level;

- KV Store can store multiple column families, and each family stores multiple sstable files;

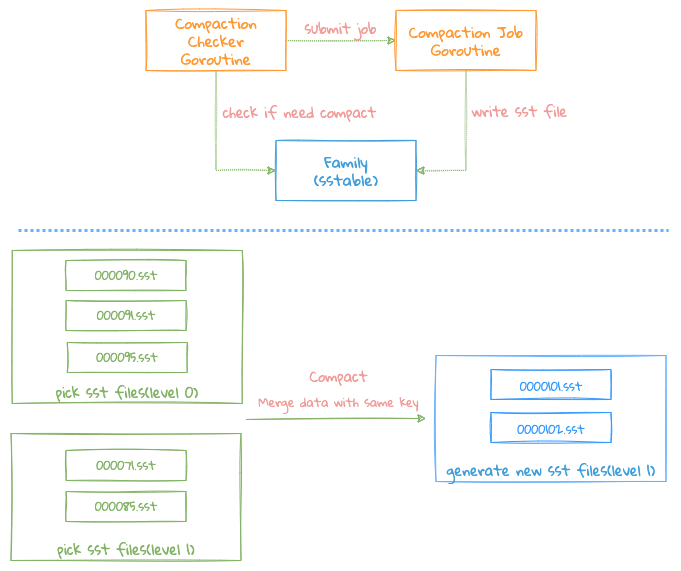

Compaction

Compaction

Compaction

- Each KV Store will start a Goroutine periodically to Check whether there are too many files in each Family Level 0 and meet the conditions of Compaction;

- When the conditions are met, the corresponding Family will be notified to execute Compaction Job, if another Compaction job is being executed, this operation will be ignored, all operations will only be done in one Goroutine,of which the benefit is lock-free , because Compaction Job is a very heavy operation, and if locking is required, it may affect the writing of new files

Compaction mainly merges the files in Level 0 into Level 1. Currently, LinDB has only 2 Level, which is different from LevelDB.

Compaction condition: any one of the following conditions will trigger the Compact task:

- the number of files in Level 0 exceeds the number of files configured;

There are 2 merge process:

- Directly moving the files of Level 0 to Level 1, but the conditions of compact should be met: the number of files of pick files from level 0 is 1, the number of files of pick fiels from level 1 is 1 The number of files is 0, that is, only one file needs to be merged, just modify Metadata;

- Need to combine multiple files into one large file;

The Compact process is as follows:

- Select the file that needs Compact, firstly select the current level 0 file, then traverse each level 0 file, press key range to get the overlapping file from level 1, why is here? Fetch level 1 files by each level 0 file, not by the final key range in level 0. Because the keys of the entire level 0 file may be relatively hashed, so if we take the final range, we may get a large range. E.g:

for example:

Level 0:

file 1: 1~10

file 2: 1000~1001

Level 1:

file 3: 1~5

file 4: 100~200

file 5: 400~500

- If we press the final range of level 0, 1~1001, this will take out all the files of level 1

- If we take it according to the range of each level 0, we only need to take the file 3 (1~5) in the end.

- The entire compact process is actually a multi-way merge process. Since the key is sorted when flush writes files, compact only needs to read each file in order, and press ** The order of key** can traverse the data from these files;

- During the process, the data with the same key needs to be merged. The merging process needs to be merged according to different data types, and the Merger Interface needs to be implemented;

- If key does not need the merge operation, write the corresponding data directly to the file, which can reduce unnecessary serialization operations;

- Once compact merging and writing files is successfully completed, submitting Version Edit to Version Set will be taken. At this time, Version Edit includes newly written files and old files that need to be deleted. This process requires locks;

- Delete those useless files: such as files that have been merged, or some intermediate result files after failure. It should be noted here that the cleanup operation must be done after the Version Edit is successfully submitted to the Version Set, otherwise which may leads to data file confusion;

TIP

- Version Edit: Similar to LevelDB, a Version Edit will be recorded for each file write operation, and Version Edit will record new files/deleted files, so that when the system restarts or crashes, just reload the Version Edit Log again. There are those that mention the whole useful document;

- Version Set: record which files are currently stored and available;

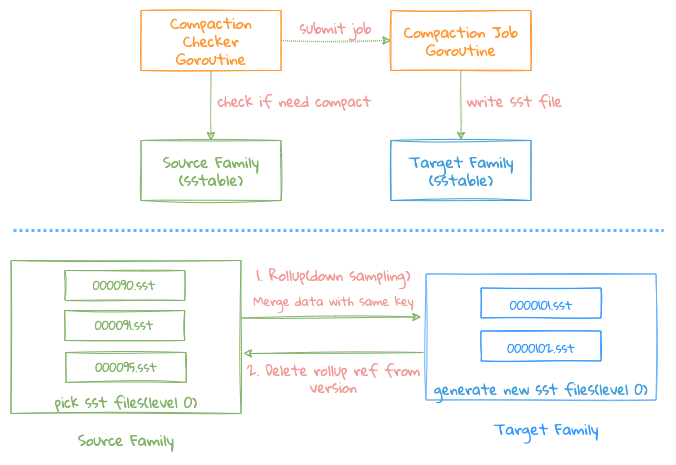

Rollup

Rollup

Rollup

Rollup Job is a special Compact Job, which mainly deals with data reduction (DownSampling), namely 10s->5m->1h, its core logic is same as Compaction Job, the main differences are as follows :

- Source Family merges data into Target Family to operate 2 Family;

- After the merging is completed, delete the files that need Rollup in Source Family Version, and record the files that have been Rollup in Target Family Version to prevent duplicate data merging;

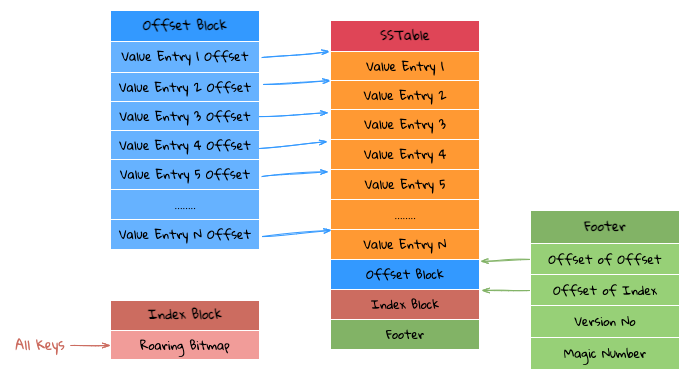

SSTable layout

SSTable

SSTable

The structure of each SSTable is shown in the figure above, and it mainly has the following components:

- Footer Block: Storing Magic Number(8 Bytes) + Version(1 byte) + Index Block Offset(4 bytes) + Offset Block Offset(4 bytes), which can be accessed through both Index Block Offset and Offset Block to read contents of Index Block and Offset Block;

- Index Block: Using Roaring Bitmap to store all the Keys in the current SSTable file, as all Key are uint32, so we can directly use Roaring Bitmap to store, such benefits is that we can use Roaring Bitmap to determine whether a Key exists, and at the same time we can also know the position of this Key in this Roaring Bitmap;

- Offset Block: store all the Offset of Value Entry, and each Offset is stored with a fixed length, so if it is found in the Nth position in the Index Block, The Offset of that Value Entry is the data pointed to by N * Offset Length;

- Value Entry: store the Value corresponding to each Key/Value, because the Key is already stored in the Index Block, so the Value Entry only needs to store the Value in the Key/Value;

The advantage of this is that the compression of Key can be done well, and Roaring Bitmap has done a lot of optimization on Bitmap, Get data through Key is very efficient, because it is not like LevelDB, binary searching Key in the middle process are not required, and the Bitmap can be resident in memory.

Based on the above storage structure, the entire query logic is as follows:

- Through Index Block, we can directly know whether the queried Key exists. If it does not exist, return it directly. If it exists, get the first position (Index) in the Bitmap;

- First jump: According to the Index obtained above, in Offset Block, after skipping Index * Offset Length, we can get Value Entry Offset(Position);

- The second jump: According to the Position obtained above, skip the Position at the beginning of the file and then the desired Value can be read directly;

If we want to do the Scan operation, just directly read the sequence based on the Index Block and Offset Blcok.